Robotic Hand for Human-like Tasks

This was my main research project through my Master’s under Professor Sangbae Kim (BRL Group) at MIT. Although most research projects are continuations of a previous student’s work, this was a completely new project in an area new to our lab: manipulation.

The project was to build a robotic hand for the home environment in collaboration with Toyota Research Institute and 4 other labs at MIT (Perceptual Science, MCube, CDFG, Improbable AI). As the only member of our lab working on the project, I had a lot of freedom to explore, but the design space for robotic hands is very complex. Compared to my previous hand project, we wanted far more functionality by adding more DOF, while maintaining robustness despite the added complexity.

Problem Statement

The complexity of the challenge stems from the environment in which robotic hands are meant to be deployed. Currently, most robotic applications in industry (assembly-line arms, CNC machines) are position-controlled and avoid contact, whereas manipulation is built on controlling forces and contacts with objects and the environment. Robotics research, on the other hand, is trying to push robots into the home environment. This requires robots to safely perform human tasks at human speeds while navigating an unstructured environment. Compared to the clutter-free, human-free, predictable factory settings in which current robots operate, the home adds many challenges: clutter, safety, and collisions with objects.

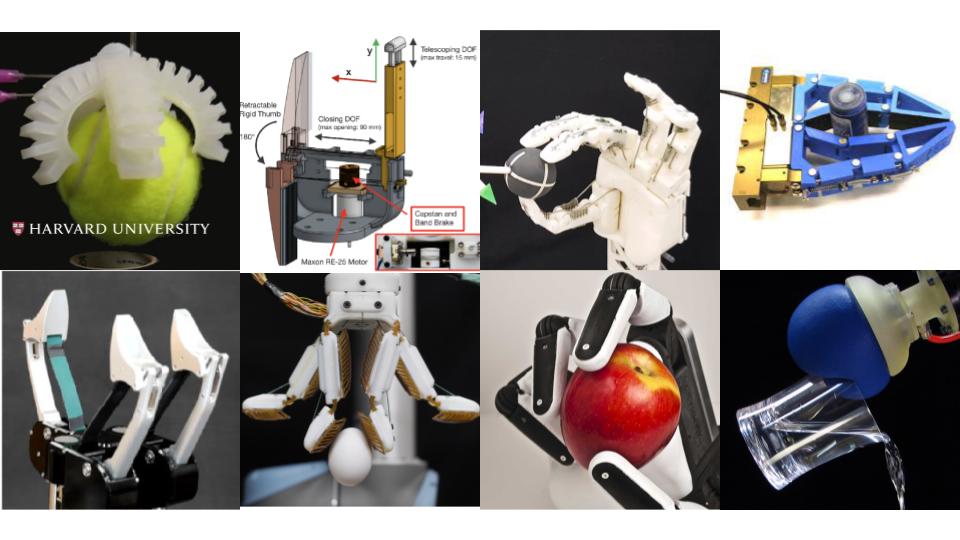

To illustrate the complexity of this design space, below is a snapshot of many robotic hands in the field.

Here are the design axes and variables that map this incredibly complex space:

- Topology: The number of fingers, the degrees of freedom (DOF) of each finger, and the opposability of the fingers determine the grasp types a hand can perform. More DOF means more complexity: more moving parts, more difficult wiring routes, and more limited control bandwidth.

- Geometry: The shape and material of the finger pad change its ability to interact with the environment. The thinner the fingers, the better they can reach into clutter and tight spaces. The shape of the palm affects the size and types of objects the hand can grasp.

- Actuation: The type of motor used (stepper, BLDC, DC with a large gearbox) changes the torque-speed curve as well as the rotor inertia (relevant to proprioception during collisions). The motor's size and shape affect where it can be mounted (in the palm, at the finger joint, or outside the hand).

- Joints: The type of joint (revolute, rolling, prismatic) changes the robustness and complexity of the finger. Whether joints are coupled also changes the kinematics and workspace.

- Transmission: Belt-and-pulley, direct, tendon-driven, and geared transmissions each have benefits and drawbacks in terms of elasticity, friction, efficiency, and complexity.

- Sensorization: The kind of sensor (contact, capacitive, force, proximity) determines the type of information you can gather from the environment and use.
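To make the actuation trade-off a bit more concrete, here is a minimal sketch using an idealized DC motor (linear torque-speed curve) behind a lossless gearbox. The function names and all numbers are illustrative assumptions, not values from this hand. It shows why a large gear ratio buys torque but raises the inertia felt at the finger, which makes collisions harder to sense through the motor.

```python
def output_torque(stall_torque, no_load_speed, output_speed, gear_ratio):
    """Torque (N*m) at the gearbox output for an idealized DC motor.

    Assumes a linear torque-speed curve and a lossless gearbox.
    output_speed is the speed of the output shaft in rad/s.
    """
    motor_speed = output_speed * gear_ratio
    # Torque falls linearly from stall torque to zero at the no-load speed.
    motor_torque = stall_torque * max(0.0, 1.0 - motor_speed / no_load_speed)
    return motor_torque * gear_ratio


def reflected_inertia(rotor_inertia, gear_ratio):
    """Rotor inertia (kg*m^2) as felt at the output: scales with gear_ratio squared."""
    return rotor_inertia * gear_ratio ** 2


# Hypothetical motor: 0.05 N*m stall torque, 1000 rad/s no-load speed,
# 1e-6 kg*m^2 rotor inertia. None of these are measured values.
for ratio in (1, 10, 100):
    stall = output_torque(0.05, 1000.0, 0.0, ratio)
    inertia = reflected_inertia(1e-6, ratio)
    print(f"ratio {ratio}: stall torque {stall:.2f} N*m, reflected inertia {inertia:.1e} kg*m^2")
```

In this simplified model, going from a 1:1 to a 100:1 ratio multiplies the stall torque by 100 but the reflected inertia by 10,000.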

Design Methodology

The way I approached this space was to first fix the topology and geometry and let that dictate the other variables. The goal was to maximize the functionality with the simplest topology. To decide what types of grasping functions the hand should be able to achieve, I went into the human grasp taxonomy literature to use the human hand as a baseline.


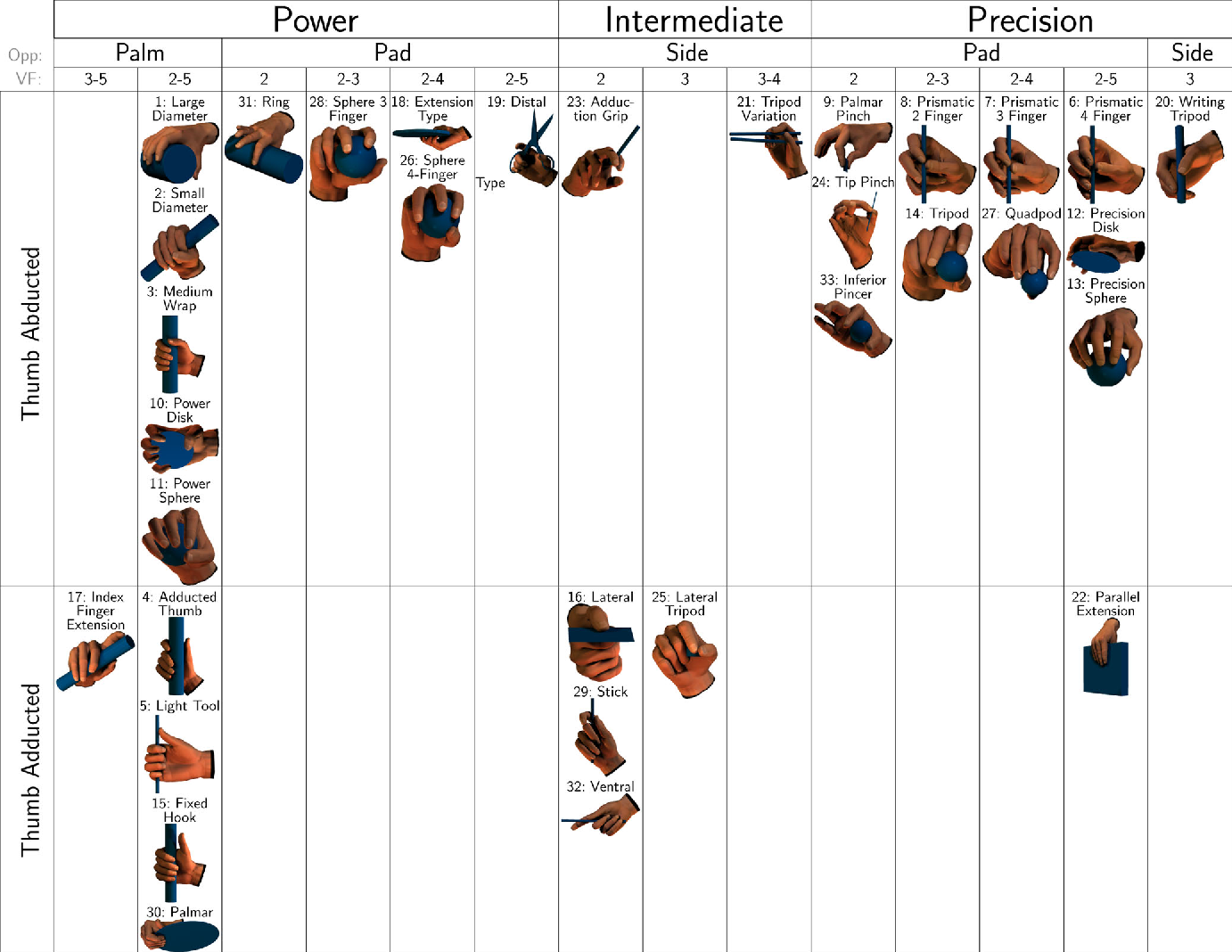

A lot of research has been done to categorize the grasps humans perform. This chart shows 30+ grasps, and a major distinction among them is based on the thumb's opposability.

"The GRASP Taxonomy of Human Grasp Types"(T. Feix, J. Romero, H. Schmiedmayer, A. Dollar, D. Kragic)

The minimum number of point contacts needed to keep a grasped object stable in 3D space is 3, so 3 fingers were chosen to reduce complexity. This also means that certain grasps cannot be achieved. Achieving all 30+ is infeasible, so we looked primarily at the 10 most frequently used grasps. The fewest degrees of freedom were chosen that could achieve as many of these grasps as possible.

"Grasp frequency and usage in daily household and machine shop tasks"(I. Bullock, J. Zheng, S. LaRosa, C. Guertler, A. Dollar)

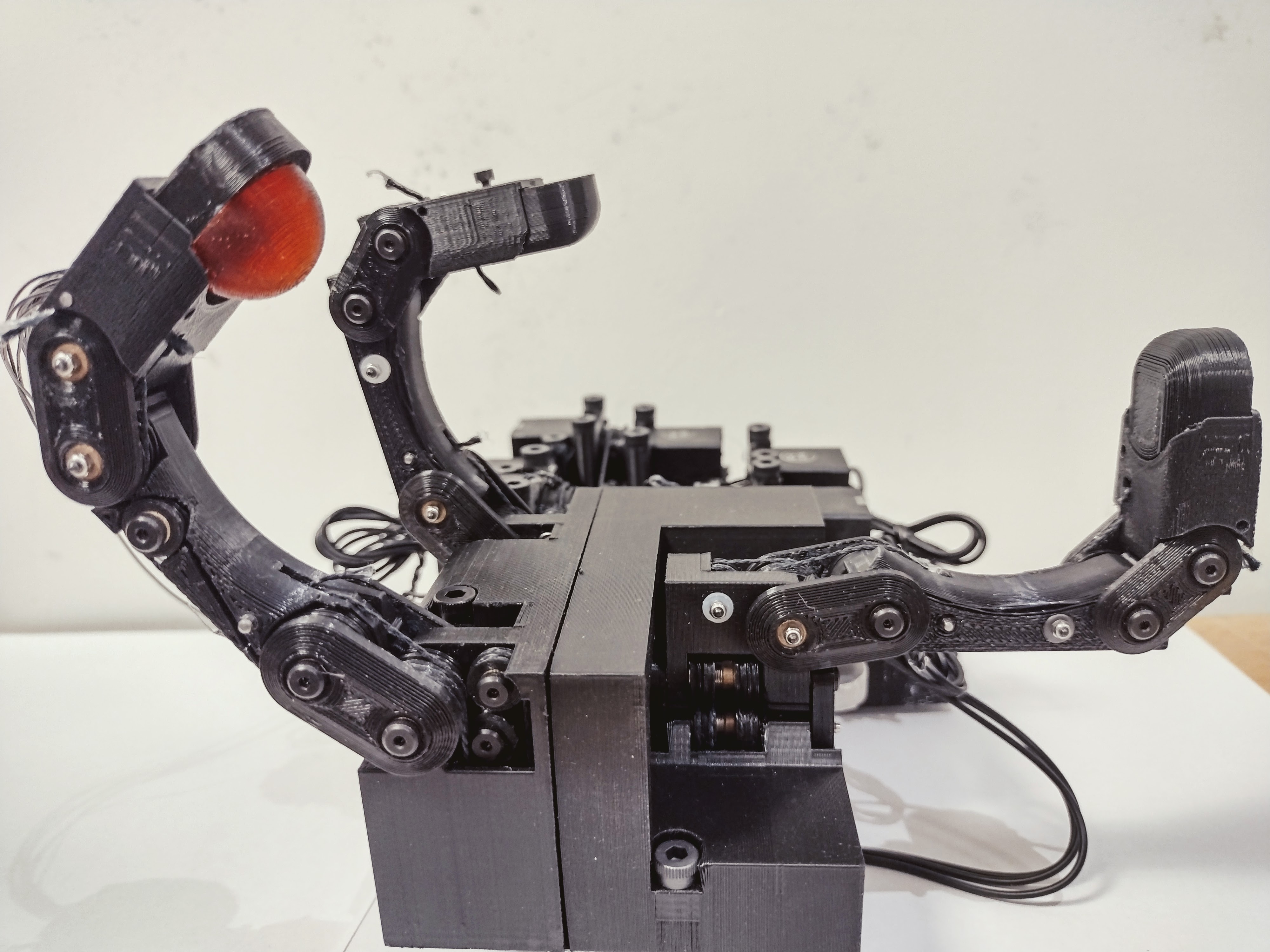

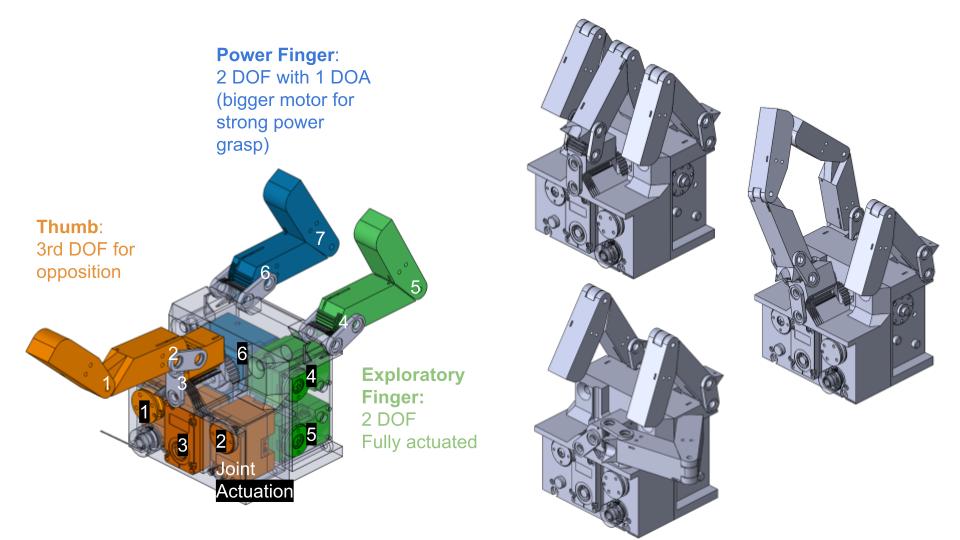

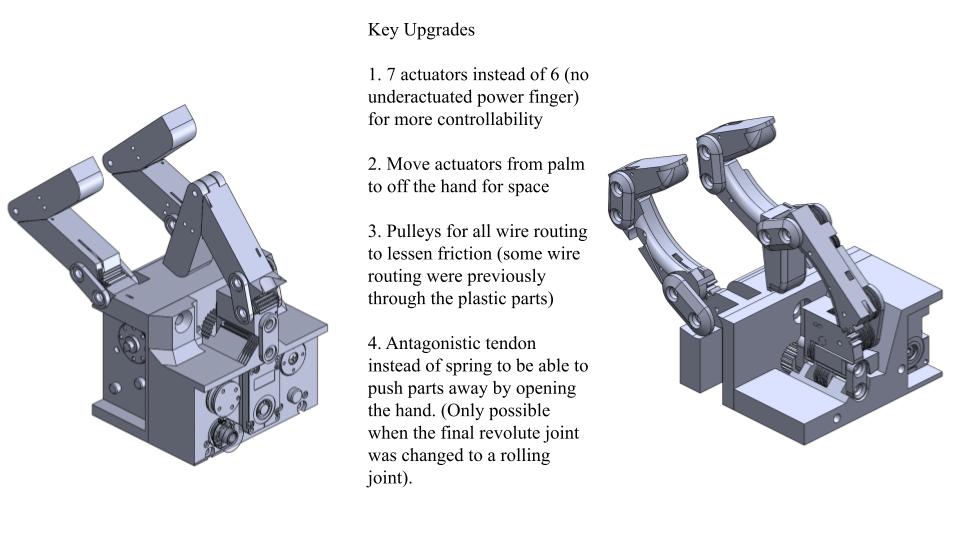

The topology chosen was 7 DOF with 3 fingers, including a thumb that allows for finger opposition. This allows for a wide enough array of grasp shapes.

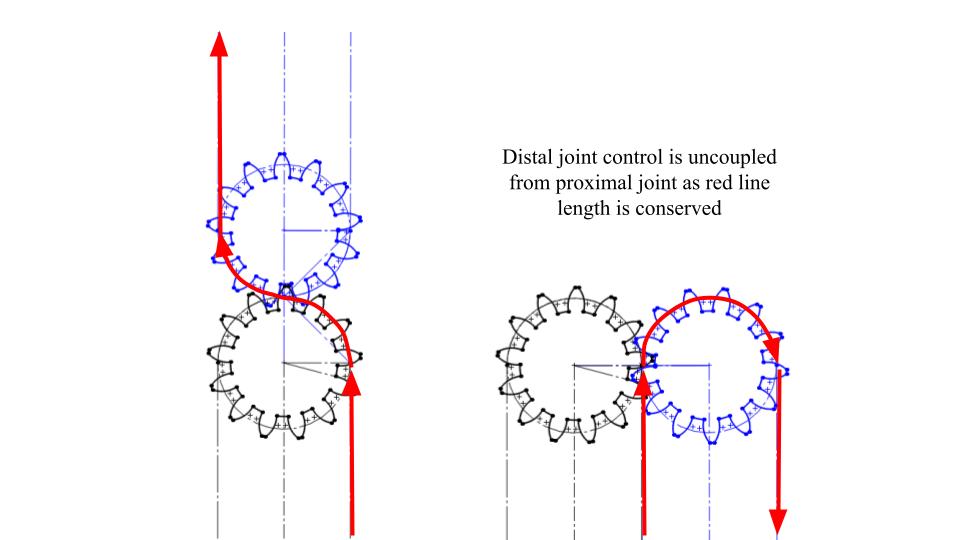

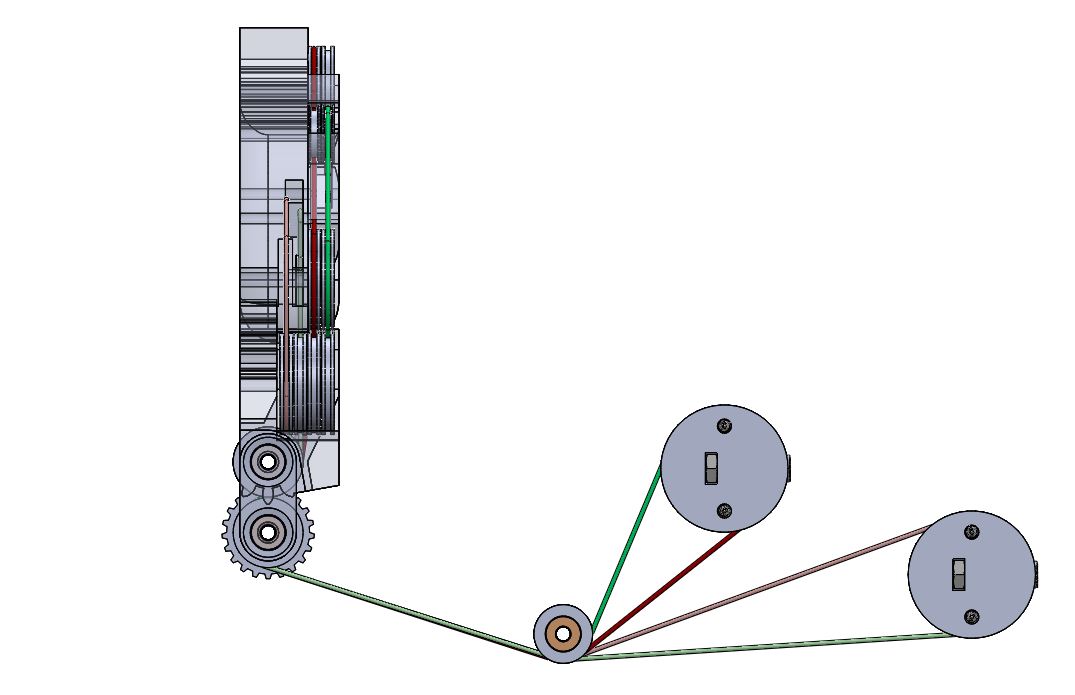

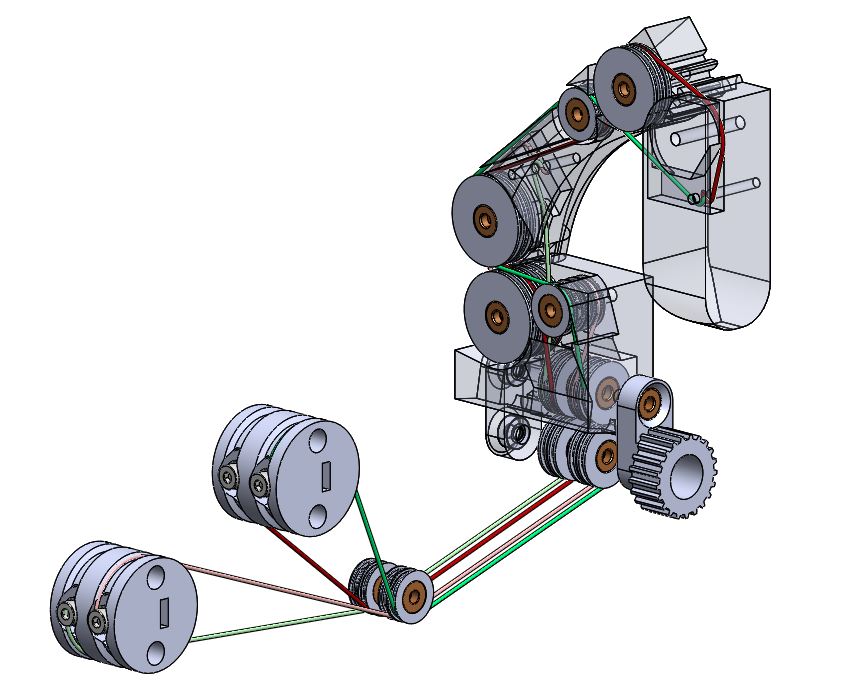

With 7 DOF and 6 motors (one finger is underactuated), it would be difficult to have all actuation in the hand, so a tendon transmission was used to allow for remote actuation. Remote actuation also allows for any motor form factor; a small Dynamixel motor was chosen to minimize weight and size. Although a tendon transmission is doable for a revolute joint, a pulley is needed for constant-length retraction. The bigger problem is joint coupling: the rolling joint makes it possible to route wires so that distal joints (far from the palm) aren’t coupled to proximal joints (close to the palm).
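The coupling problem can be illustrated with the usual tendon-kinematics relation, where each tendon's length change is the sum of (moment arm × joint angle) over the joints it crosses. The sketch below is a simplified two-joint example under that assumption; the function name, the 8 mm radius, and both routing matrices are hypothetical and only idealize what decoupled routing aims for.

```python
def tendon_excursions(moment_arms, joint_angles):
    """Length change (m) of each tendon for a given joint configuration.

    moment_arms[i][j] is the moment arm (m) of tendon i about joint j.
    A zero entry means joint j does not change the length of tendon i.
    """
    return [
        sum(r * q for r, q in zip(row, joint_angles))
        for row in moment_arms
    ]


# Hypothetical 2-joint finger: joint 0 is proximal, joint 1 is distal.
R = 0.008  # pulley radius in metres (illustrative value)

# Distal tendon wraps a pulley at the proximal joint, so it is coupled to it.
coupled = [[R, 0.0],
           [R, R]]

# Distal tendon routed with no moment arm about the proximal joint.
decoupled = [[R, 0.0],
             [0.0, R]]

q = [0.5, 0.0]  # flex only the proximal joint (rad)
print(tendon_excursions(coupled, q))    # the distal tendon length changes too
print(tendon_excursions(decoupled, q))  # the distal tendon is unaffected
```

With the coupled routing, the distal motor would have to pay out or take up wire every time the proximal joint moves; with the decoupled routing, each motor only needs to track its own joint.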

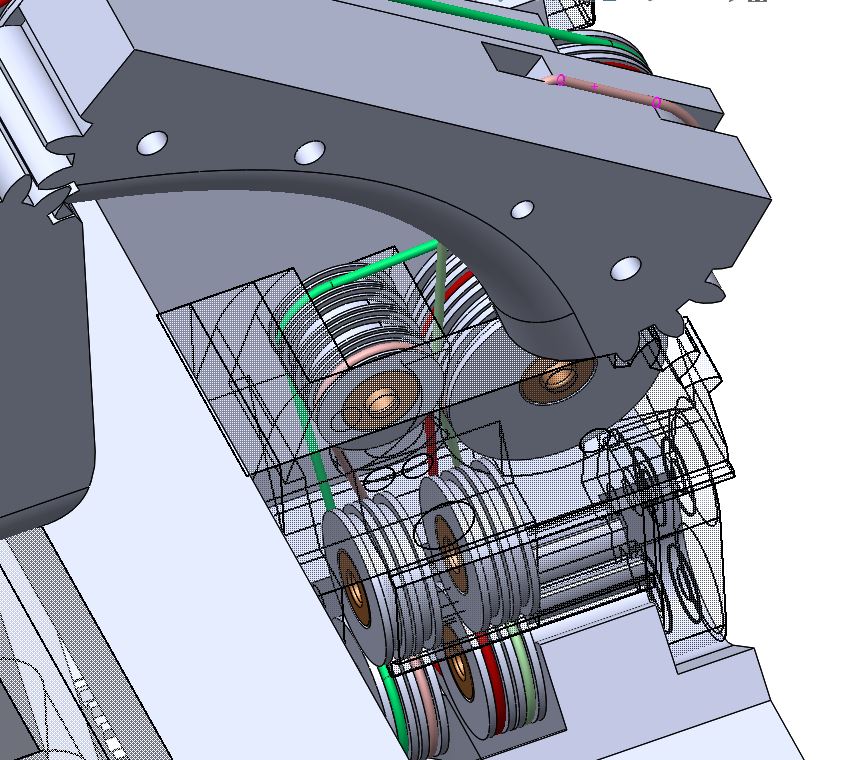

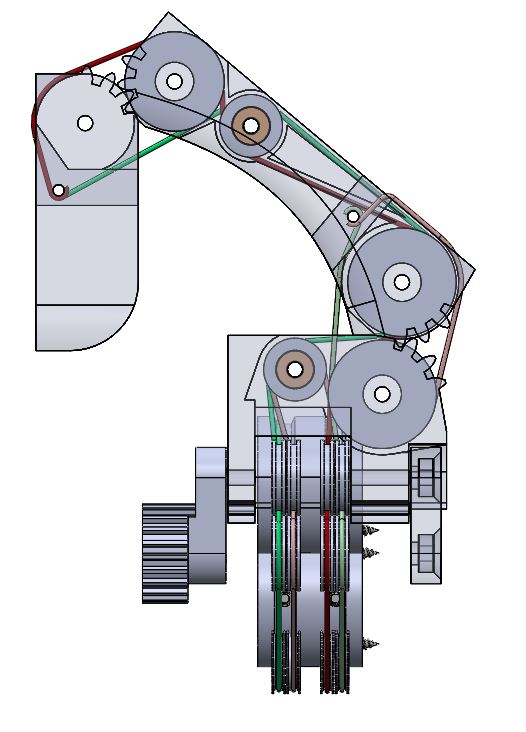

The rolling joint and how it solves the wire coupling problem are shown below.

First Prototype

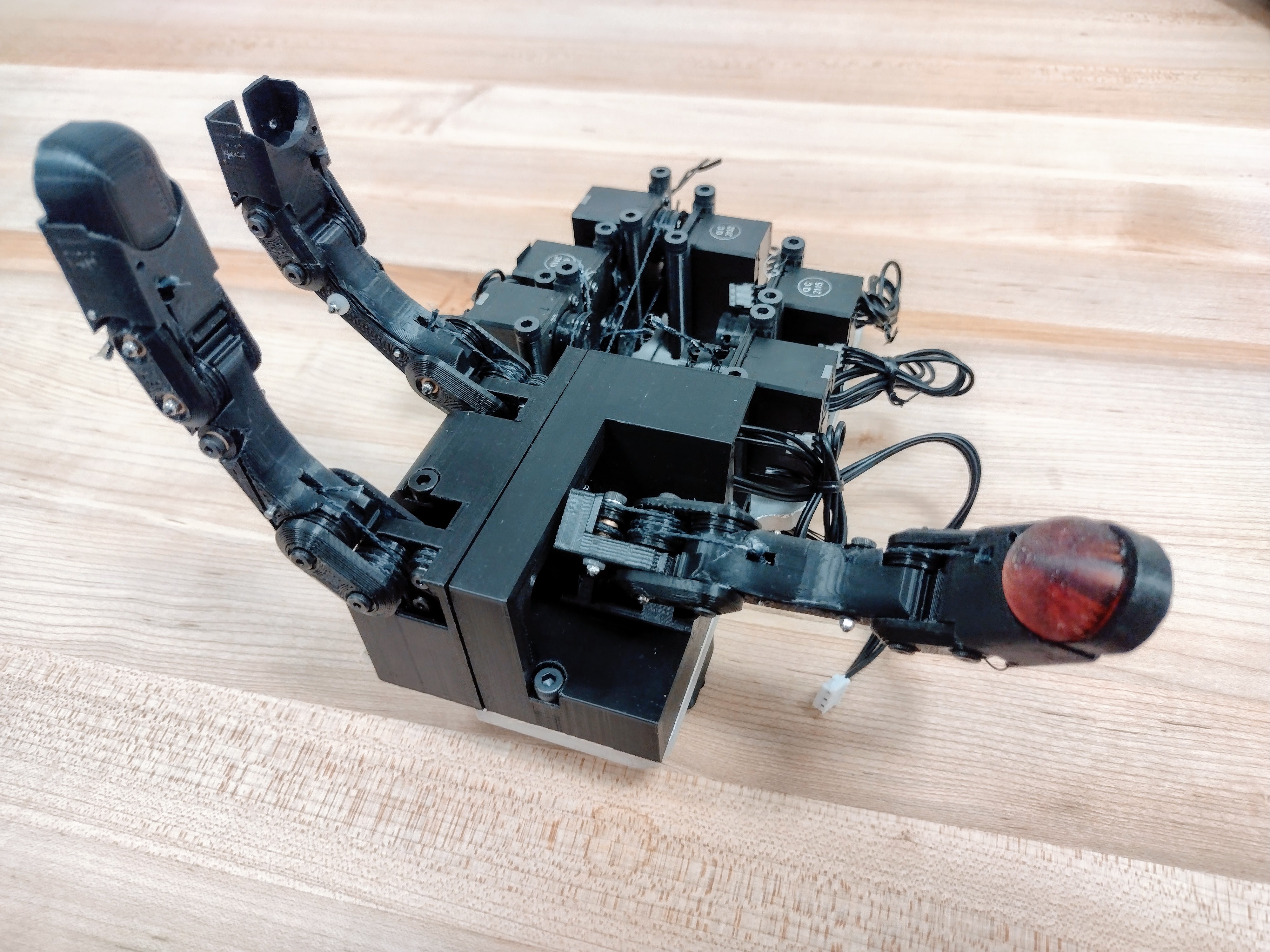

This first version was a good testing ground for a couple of ideas that will be improved upon in future versions. All 6 motors were built into the palm, making it as compact as it could get, so adding another actuator would require expanding beyond the hand. Each finger has a different number of actuators for better specialization. A spring was used as the antagonistic tendon to restore the hand to its resting position.

A CAD model of the hand:

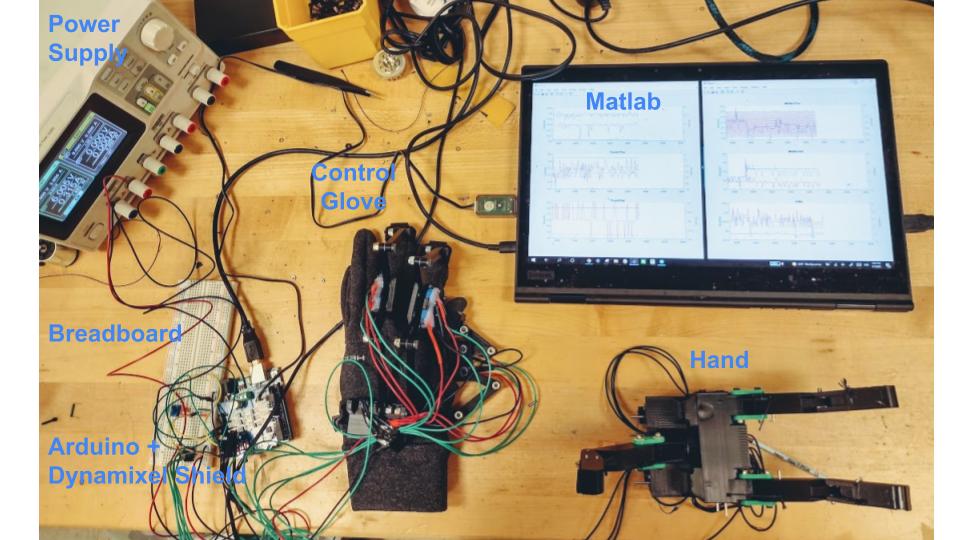

The free motion control uses a control glove through an Arduino, with logging through MATLAB.

The grasping capabilities of the hand.


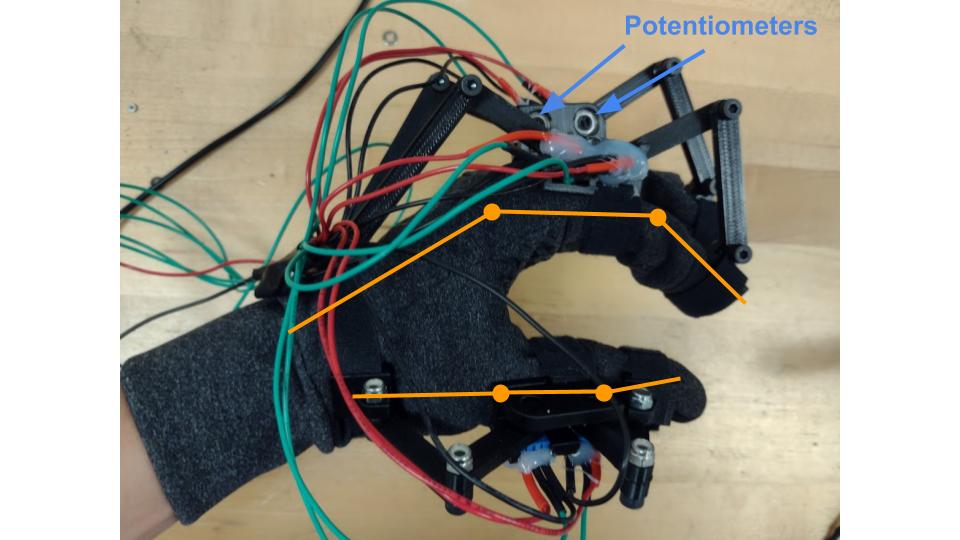

A control glove was designed to map the user's joint commands to the hand. As the user moves their hand, the potentiometers attached to the Arduino map the joint space of the human hand to that of the robot. The Arduino also reports the joint position and current (both commanded and actual) to the computer, where they are live-plotted in MATLAB for troubleshooting and data collection.
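As a rough illustration of the glove-to-hand mapping, the sketch below linearly rescales a raw potentiometer reading into a robot joint angle and clamps it to the joint limits. It is written in Python for readability rather than as the actual Arduino firmware. The function name, the calibration readings, and the joint limits are all assumptions made for the example.

```python
def map_glove_to_joint(adc_value, adc_min, adc_max, joint_min, joint_max):
    """Linearly map a potentiometer reading to a robot joint angle (rad).

    adc_min and adc_max are calibration readings taken with the user's
    finger fully open and fully closed. The result is clamped so the robot
    joint never leaves the range [joint_min, joint_max].
    """
    span = adc_max - adc_min
    if span == 0:
        raise ValueError("calibration range is empty")
    fraction = (adc_value - adc_min) / span
    fraction = min(1.0, max(0.0, fraction))  # clamp outside the calibrated range
    return joint_min + fraction * (joint_max - joint_min)


# Hypothetical calibration: readings from 0 to 1024 map to 0 to 1.5 rad.
print(map_glove_to_joint(512, 0, 1024, 0.0, 1.5))   # mid-range reading
print(map_glove_to_joint(2000, 0, 1024, 0.0, 1.5))  # out-of-range reading is clamped
```

A per-user calibration step (recording the open and closed readings for each finger) would supply `adc_min` and `adc_max` in practice.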

Second Prototype

In the second prototype, many changes were implemented based on the lessons learned from the first. Physically, it is more reactive and robust, with less friction (although that came at the cost of more pulleys and complexity). The bandwidth and speed of the hand increased considerably as the electronics were upgraded from an Arduino to an STM microcontroller. In addition, the fingertips are easily exchangeable to fit the new sensors I have designed (see page for more details).

The tendon routing was quite intricate to design, especially for the thumb, which has 3 DOF with an abduction joint that is not in the same plane as the distal and proximal phalanges. The pictures below show the 2 pairs of wires in the thumb. The red and green wires are the pair for the distal phalange, which has to travel through the abduction joint and the proximal phalange. The light red and light green wires are the pair for the proximal phalange, which only has to travel through the abduction joint.

Below are the videos of the experiments. The hand and arm were controlled by a human operator via teleoperation; the limited dexterity of the hand was more a function of the user’s (my) inability to coordinate that many DOF well without any haptic feedback.

In addition to teleoperation, the hand’s low friction and motor backdrivability have inspired some exploration of proprioception. In the test videos below, the hand senses external forces through the current readings at the motor (it is hard to see in the first video, but the middle-left graph on the MATLAB interface shows jolts corresponding to the touches). Using this, a very rough reflex can be created that automatically closes the hand when contact is detected (a possible area for future research).
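One simple way such a reflex could work is to compare the measured motor current against the commanded current and trigger a close when the difference exceeds a threshold. The sketch below assumes exactly that. It is not the controller used on the hand, and the function names, threshold, and current values are hypothetical.

```python
def detect_contact(commanded_current, measured_current, threshold):
    """Flag contact when the measured current deviates from the command by more than threshold (A)."""
    return abs(measured_current - commanded_current) > threshold


def reflex_command(commanded_current, measured_current, threshold, close_current):
    """Return the current to command next: the closing current if contact is flagged."""
    if detect_contact(commanded_current, measured_current, threshold):
        return close_current
    return commanded_current


# Hypothetical samples (amps): the hand holds 0.10 A, then something touches a finger.
measured = [0.10, 0.11, 0.09, 0.25, 0.12]
commands = [
    reflex_command(0.10, m, threshold=0.05, close_current=0.40)
    for m in measured
]
print(commands)
```

This version reacts sample by sample, so the closing command drops out as soon as the current spike ends. A more usable reflex would likely filter the current signal and latch the closed state.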